Today, we are taking a look at the Flan Collection from Google Research. The goal of Flan is to examine and release a large collection of tasks, templates, and methods for instruction tuning, an approach for fine-tuning language models on a collection of NLP tasks phrased as instructions.

So, let’s take a look at instruction tuning and Flan.

Instruction Tuning for Language Models

Language models have come a long way in recent years. Thanks to an approach called "instruction tuning", they can now follow instructions, often on tasks they have never encountered before.

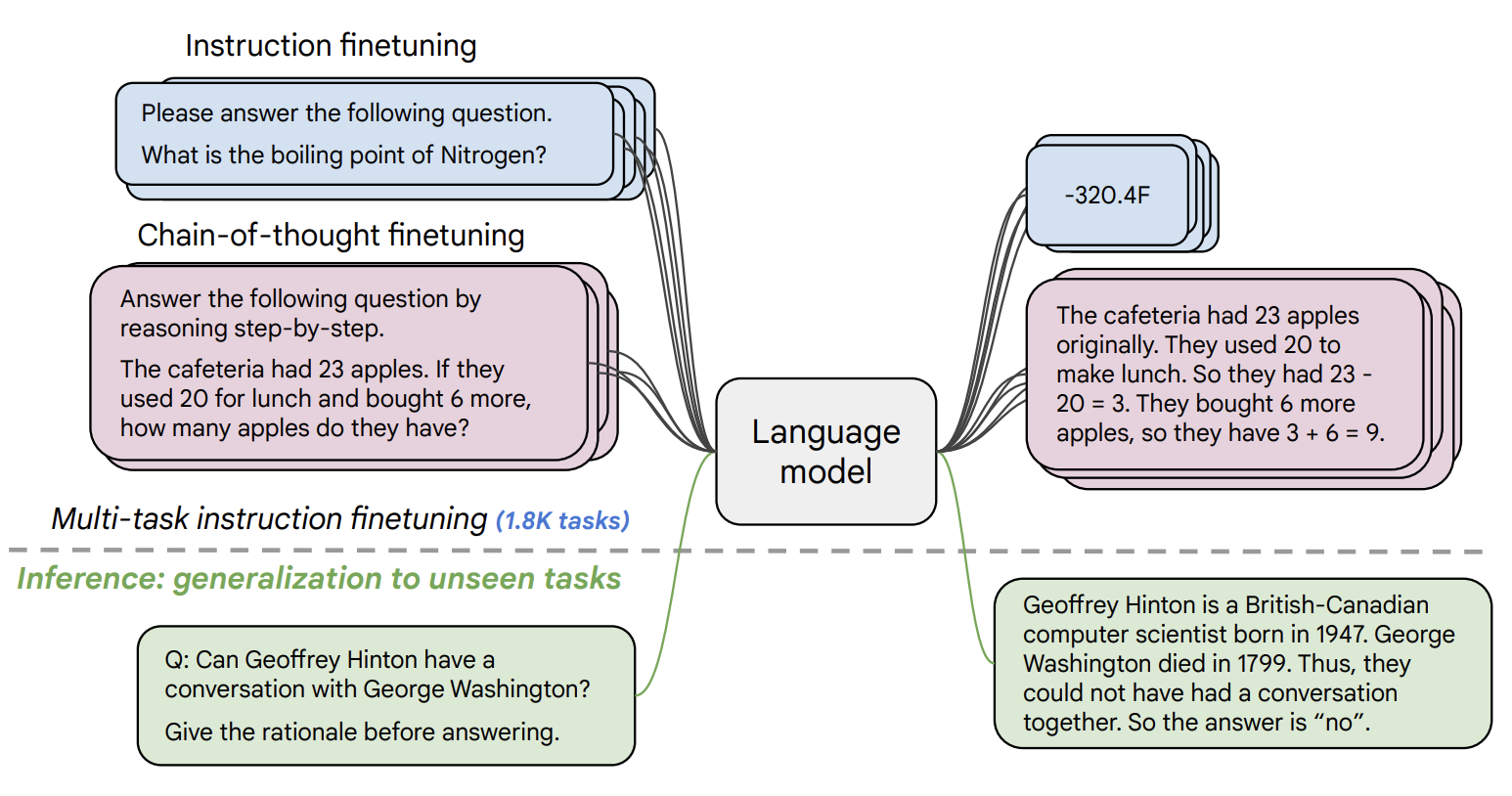

The idea behind instruction tuning, depicted in the figure above, is to fine-tune a large language model on a set of tasks phrased as instructions, such as "Please answer the following question. What is the boiling point of nitrogen?".
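To make the idea concrete, here is a minimal Python sketch of how a raw question-answer example might be turned into an instruction-formatted training pair. The template strings and the Example, to_instruction_pair, and build_training_set names are hypothetical illustrations, not the Flan Collection's actual templates or code; the collection uses many templates per task, which the random template choice below only loosely imitates.

```python
import random
from dataclasses import dataclass

# Hypothetical instruction templates for a question-answering task.
# {question} is filled in from each raw example.
QA_TEMPLATES = [
    "Please answer the following question. {question}",
    "Answer this question: {question}",
    "Q: {question}\nA:",
]


@dataclass
class Example:
    question: str
    answer: str


def to_instruction_pair(example: Example, template: str) -> dict:
    """Render one raw example into an (input, target) pair for seq2seq fine-tuning."""
    return {
        "input": template.format(question=example.question),
        "target": example.answer,
    }


def build_training_set(examples, templates, seed=0):
    """Pair every example with a randomly chosen template (a simple mixing strategy)."""
    rng = random.Random(seed)
    return [to_instruction_pair(ex, rng.choice(templates)) for ex in examples]


if __name__ == "__main__":
    raw = Example("What is the boiling point of nitrogen?", "About -196 °C (77 K).")
    pair = to_instruction_pair(raw, QA_TEMPLATES[0])
    print(pair["input"])
    # → Please answer the following question. What is the boiling point of nitrogen?
```

Rendering the same underlying example through several phrasings is what lets a model learn to respond to the instruction rather than to one fixed prompt format.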

For example, the latest Flan models introduced by Google Research (see the figure above) were fine-tuned on more than 1,800 tasks. At inference time, these models generalize to new, unseen tasks.

Several instruction tuning methods have recently been proposed, including FLAN, T0, Super-Natural Instructions, MetaICL, and InstructGPT. However, the data behind these breakthroughs has remained largely inaccessible to the wider research community.

The paper we are looking at today aims to address this issue. The authors examine and release a larger and more comprehensive publicly available collection of tasks, templates, and methods for instruction tuning, aimed at advancing the ability of the research community to analyze and improve instruction tuning methods. The collection was first used in Flan-T5 and Flan-PaLM, with the latter achieving significant improvements over PaLM.

For context, the Flan models themselves were introduced by Google in a separate paper. There are two main model types: Flan-T5, an encoder-decoder model based on T5, and Flan-PaLM, a decoder-only language model based on PaLM (architecturally similar to GPT-3). At the same parameter count, the Flan models outperform the base models they were fine-tuned from. Best of all, the Flan-T5 checkpoints are available to download from the Hugging Face Hub (the Flan-PaLM weights have not been publicly released).
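As a rough sketch, querying a Flan-T5 checkpoint with the Hugging Face transformers library could look like the Python below. It assumes transformers and PyTorch are installed and uses the google/flan-t5-small checkpoint ID as an example; build_prompt and ask_flan_t5 are hypothetical helper names, and the generated answers will vary with model size.

```python
def build_prompt(question: str) -> str:
    """Wrap a question in the instruction phrasing used as an example in this post."""
    return f"Please answer the following question. {question}"


def ask_flan_t5(question: str, model_name: str = "google/flan-t5-small",
                max_new_tokens: int = 32) -> str:
    """Download (on first use) a Flan-T5 checkpoint and generate an answer."""
    # Imported lazily so this module can be loaded without transformers installed.
    from transformers import AutoModelForSeq2SeqLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_name)
    model = AutoModelForSeq2SeqLM.from_pretrained(model_name)

    # Flan-T5 is an encoder-decoder model: the prompt goes to the encoder,
    # and generate() decodes the answer token by token.
    inputs = tokenizer(build_prompt(question), return_tensors="pt")
    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)
    return tokenizer.decode(output_ids[0], skip_special_tokens=True)
```

For example, ask_flan_t5("What is the boiling point of nitrogen?") returns the decoded answer as a string. Passing a larger Flan-T5 checkpoint as model_name trades speed for answer quality.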

Results

The results show that models trained on the Flan Collection outperform those trained on comparable public collections on every evaluated benchmark. For example, the improvement was 3% or more on the 57 tasks of the Massive Multitask Language Understanding (MMLU) suite and 8% on BIG-Bench Hard (BBH).

The researchers also compare Flan-T5 and T5 as starting points for fine-tuning. Three scenarios were evaluated: fine-tuning T5 directly on the target task, using Flan-T5 without any further fine-tuning, and fine-tuning Flan-T5 on the target task. Fine-tuning Flan-T5 outperformed fine-tuning T5 directly. In some cases, Flan-T5 used as-is, with no further fine-tuning, even outperformed T5 fine-tuned on the target task.
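The three scenarios can be written down as a small experiment configuration. The Python sketch below is purely illustrative: the Scenario class and describe helper are hypothetical, and the Hub IDs (t5-base, google/flan-t5-base) are assumptions, not the paper's exact checkpoints or model sizes.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Scenario:
    name: str
    init_checkpoint: str      # Hub ID of the starting model (assumed for illustration)
    finetune_on_target: bool  # whether to run single-task fine-tuning afterwards


# Hypothetical configuration mirroring the three setups described above.
SCENARIOS: List[Scenario] = [
    Scenario("T5 fine-tuned", "t5-base", True),
    Scenario("Flan-T5 without fine-tuning", "google/flan-t5-base", False),
    Scenario("Flan-T5 fine-tuned", "google/flan-t5-base", True),
]


def describe(s: Scenario) -> str:
    """Summarize what a run in this scenario would do."""
    action = "fine-tune on the target task" if s.finetune_on_target else "evaluate as-is"
    return f"{s.name}: start from {s.init_checkpoint}, then {action}"


if __name__ == "__main__":
    for s in SCENARIOS:
        print(describe(s))
```

Keeping the starting checkpoint and the fine-tuning decision as separate fields makes clear that the second and third scenarios differ only in whether single-task fine-tuning is applied.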

The results suggest that starting from Flan-T5 makes training faster and cheaper: it converges more quickly and reaches higher accuracy than fine-tuning T5. This in turn suggests that less task-specific training data may be needed to achieve comparable or better results.

Using instruction-tuned models like Flan-T5 as the starting point for single-task fine-tuning can also bring significant energy-efficiency benefits to the NLP community. Pre-training and instruction tuning are expensive, but they are one-time costs; after that, each downstream task needs fewer fine-tuning steps to reach the same or better performance.
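The one-time-cost argument can be made explicit with a simplified cost model. The symbols are illustrative assumptions, not quantities reported in the paper: C_IT is the one-time cost of instruction tuning, c the cost of one fine-tuning step, s_T5 and s_Flan the number of steps needed to reach a given accuracy on one task when starting from T5 or from Flan-T5, and N the number of downstream tasks. Pre-training is shared by both routes, so it cancels out of the comparison.

```latex
\begin{aligned}
C_{\text{T5}}(N) &= N\,c\,s_{\text{T5}}, \\
C_{\text{Flan}}(N) &= C_{\text{IT}} + N\,c\,s_{\text{Flan}}, \\
C_{\text{Flan}}(N) < C_{\text{T5}}(N) &\iff N > \frac{C_{\text{IT}}}{c\,(s_{\text{T5}} - s_{\text{Flan}})} \quad (\text{for } s_{\text{T5}} > s_{\text{Flan}}), \\
\frac{C_{\text{Flan}}(N)}{N} &= \frac{C_{\text{IT}}}{N} + c\,s_{\text{Flan}} \;\longrightarrow\; c\,s_{\text{Flan}} \quad \text{as } N \to \infty.
\end{aligned}
```

Under this model, once Flan-T5 needs fewer steps per task than T5, the instruction-tuning cost is recovered after enough downstream tasks, and its per-task share shrinks toward zero as more tasks reuse the same checkpoint.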

Conclusion

The Flan Collection represents a significant step forward for instruction tuning. By publicly releasing a comprehensive collection of tasks, templates, and methods, the authors give the research community a shared foundation for analyzing and improving instruction tuning. The results suggest that the collection provides a more performant starting point for researchers and practitioners, whether they want to generalize to new instructions or fine-tune on a single new task.

Thanks for reading! If you are looking for state-of-the-art expertise in Natural Language Processing, you should check out our services at The Global NLP Lab.