Large language models with 100 billion parameters or more achieve strong results on a wide range of tasks, even with few or no training examples. However, even the largest language models can still struggle with certain multi-step reasoning tasks, such as math word problems and commonsense reasoning.

To address this, researchers at Google came up with a method called chain of thought prompting that aims to improve the reasoning abilities of language models. This method enables the model to decompose multi-step problems into intermediate steps, allowing it to solve complex reasoning problems that standard prompting methods fail on.

In today's article, we are going to take a look at chain of thought prompting, discussing the benefits of the approach, as well as the experimental results. So let's get started!

We also published this article in video format; you can watch it here.

What is chain of thought prompting?

Now, you may be wondering - how does chain of thought prompting differ from standard prompting?

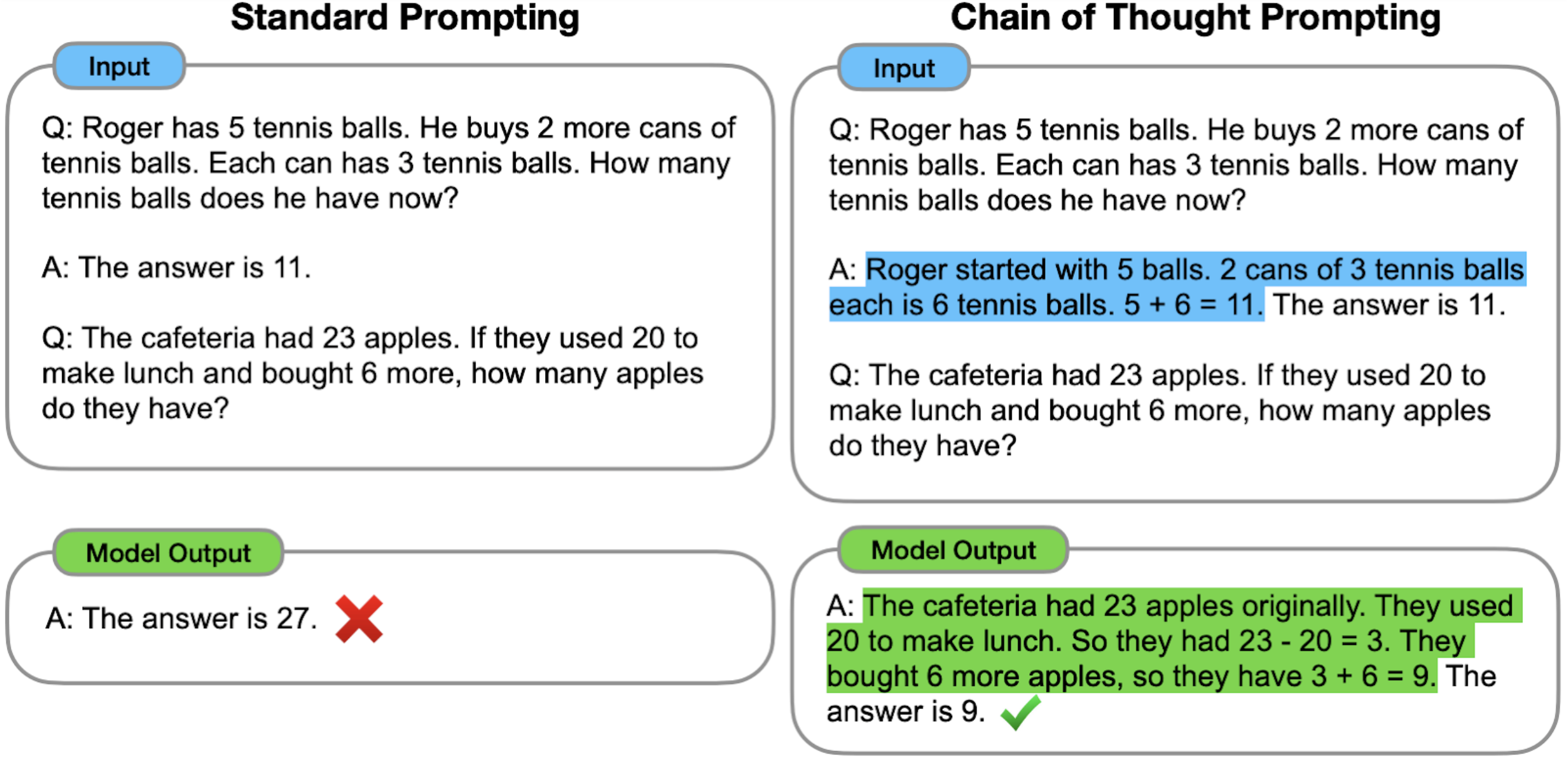

With standard prompting, displayed on the left part of the figure above, the model is given examples of input-output pairs (formatted as questions and answers) before being asked to predict the answer for a test-time example. This method was popularized by the GPT-3 model and has been shown to be effective in a range of NLP tasks.

With chain of thought prompting, shown on the right, the model is prompted to produce intermediate reasoning steps before giving the final answer to a multi-step problem. This is achieved by expanding the examples in the prompt to contain the detailed reasoning process, such as "Roger started with 5 balls. 2 cans of 3 tennis balls each is 6 tennis balls. 5 + 6 = 11. The answer is 11." in the example. When presented with an unseen question, the language model is expected to produce the reasoning steps before outputting the final answer. The idea here is that a model-generated chain of thought would mimic an intuitive thought process when working through a multi-step reasoning problem.
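The difference between the two prompt formats can be sketched in a few lines of Python. This is a minimal illustration, not the researchers' code: the `Exemplar` class and `build_prompt` function are hypothetical names, the `Q:`/`A:` layout is an assumed formatting convention, and the wording of both questions is an assumption written to match the reasoning quoted above.

```python
from dataclasses import dataclass


@dataclass
class Exemplar:
    question: str
    reasoning: str  # intermediate steps; only used for chain of thought
    answer: str


def build_prompt(exemplars, test_question, chain_of_thought):
    """Assemble a few-shot prompt (hypothetical helper).

    With chain_of_thought=False each example shows only the final answer;
    with chain_of_thought=True the reasoning steps precede the answer.
    """
    blocks = []
    for ex in exemplars:
        if chain_of_thought:
            completion = f"{ex.reasoning} The answer is {ex.answer}."
        else:
            completion = f"The answer is {ex.answer}."
        blocks.append(f"Q: {ex.question}\nA: {completion}")
    # The test question ends with an open "A:" for the model to complete.
    blocks.append(f"Q: {test_question}\nA:")
    return "\n\n".join(blocks)


# Question wording is an assumption; the reasoning is the example quoted above.
roger = Exemplar(
    question=(
        "Roger has 5 tennis balls. He buys 2 more cans of tennis balls. "
        "Each can has 3 tennis balls. How many tennis balls does he have now?"
    ),
    reasoning=(
        "Roger started with 5 balls. 2 cans of 3 tennis balls each is "
        "6 tennis balls. 5 + 6 = 11."
    ),
    answer="11",
)

# Illustrative unseen question (an assumption, not taken from the article).
test_question = (
    "The cafeteria had 23 apples. If they used 20 to make lunch "
    "and bought 6 more, how many apples do they have?"
)

standard_prompt = build_prompt([roger], test_question, chain_of_thought=False)
cot_prompt = build_prompt([roger], test_question, chain_of_thought=True)
print(cot_prompt)
```

The only change between the two prompts is the text after each example's `A:`. The model, its weights, and the test question stay the same.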

Chain-of-thought is a simple and intuitive technique that can be used with any off-the-shelf language model. One benefit of this prompting approach is that it forces the model to decompose the problem into several reasoning steps, allocating more computation to them before producing the final answer. Furthermore, a chain of thought is easy for humans to interpret, and the approach is applicable to a wide range of problems, such as commonsense reasoning, without the need for a large training dataset or for modifying the model's weights.
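Because the reasoning is written out as plain text ahead of the final answer, the two parts can be separated and inspected. The sketch below assumes the model ends its output with the phrase "The answer is N." for a numeric N, as in the example above. The function name `split_chain_of_thought` is hypothetical, and real model outputs may not follow this format reliably.

```python
import re

# Assumed output convention: the completion ends with "The answer is <number>."
ANSWER_PATTERN = re.compile(r"The answer is\s+(-?\d+(?:\.\d+)?)")


def split_chain_of_thought(completion):
    """Return (reasoning, answer); answer is None if no final answer is found."""
    matches = list(ANSWER_PATTERN.finditer(completion))
    if not matches:
        return completion.strip(), None
    last = matches[-1]  # use the last match in case the phrase appears earlier
    return completion[: last.start()].strip(), last.group(1)


completion = (
    "Roger started with 5 balls. 2 cans of 3 tennis balls each is "
    "6 tennis balls. 5 + 6 = 11. The answer is 11."
)
reasoning, answer = split_chain_of_thought(completion)
print(reasoning)
print(answer)
```

Keeping the reasoning alongside the answer makes it possible to read the intermediate steps when an answer turns out to be wrong.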

How does chain of thought prompting work?

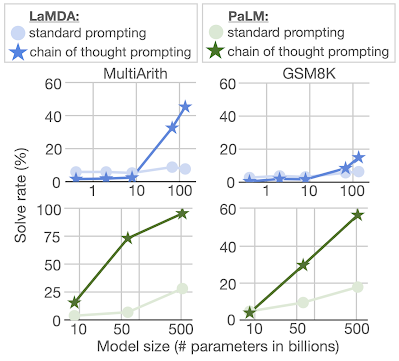

So how does chain of thought prompting perform in practice? The researchers tested it on a variety of reasoning tasks, including arithmetic, commonsense, and symbolic reasoning. One area where language models typically struggle is arithmetic reasoning, that is, solving math word problems. The researchers tested their method on two benchmarks in this area: MultiArith and GSM8K. They evaluated both the LaMDA collection of language models, ranging from 422M to 137B parameters, and the PaLM collection of language models, ranging from 8B to 540B parameters.

They found that using standard prompting led to relatively flat scaling curves (see the figure), meaning that increasing the scale of the model did not substantially improve performance. However, when using chain of thought prompting, they found that increasing model scale led to improved performance that substantially outperformed the baseline.

But it's not just arithmetic reasoning where chain of thought prompting shows promise. The researchers also found that it improved performance on other reasoning tasks, including commonsense and symbolic reasoning. This is a significant development, as it allows language models to approach problem-solving in a more human-like manner, breaking down complex problems into intermediate steps that are solved one at a time.

Should I switch to chain of thought for my task and use case?

Now, you might be wondering: will switching to chain-of-thought lead to better performance for my task of interest? There are several aspects to consider.

The first aspect is the complexity of your target task. If your task involves complex multi-hop reasoning, chain-of-thought prompting is likely to help. For simple tasks, it might make little to no difference.

Second, are you planning to use a large language model to solve your task? As discussed previously, chain of thought seems to have the biggest effect when using language models larger than 100 billion parameters. If you are not planning to use such a model, switching to chain-of-thought probably won't make a difference.

Finally, if you're not able to get further gains on the task with standard prompting, even when using the largest language models, this might be an indicator that you need to try an alternative prompting method, such as chain-of-thought.

Conclusion

So there you have it - an overview of chain of thought prompting. It's a simple and effective method that improves the reasoning abilities of language models. We're looking forward to seeing how prompting methods evolve in the future.

If you are looking to start a project in NLP using large language models and prompting methods, and would like to get advice from an advanced team of experts with PhDs who have been working in the area for 5+ years, you should get in touch with us at The Global NLP Lab. Looking forward to talking to you!